Working with OpenAI models and want tighter control over your prompts? The Prompt Token Counter for OpenAI Models shows how many tokens a prompt uses, so you can keep it within model limits and keep costs in check.

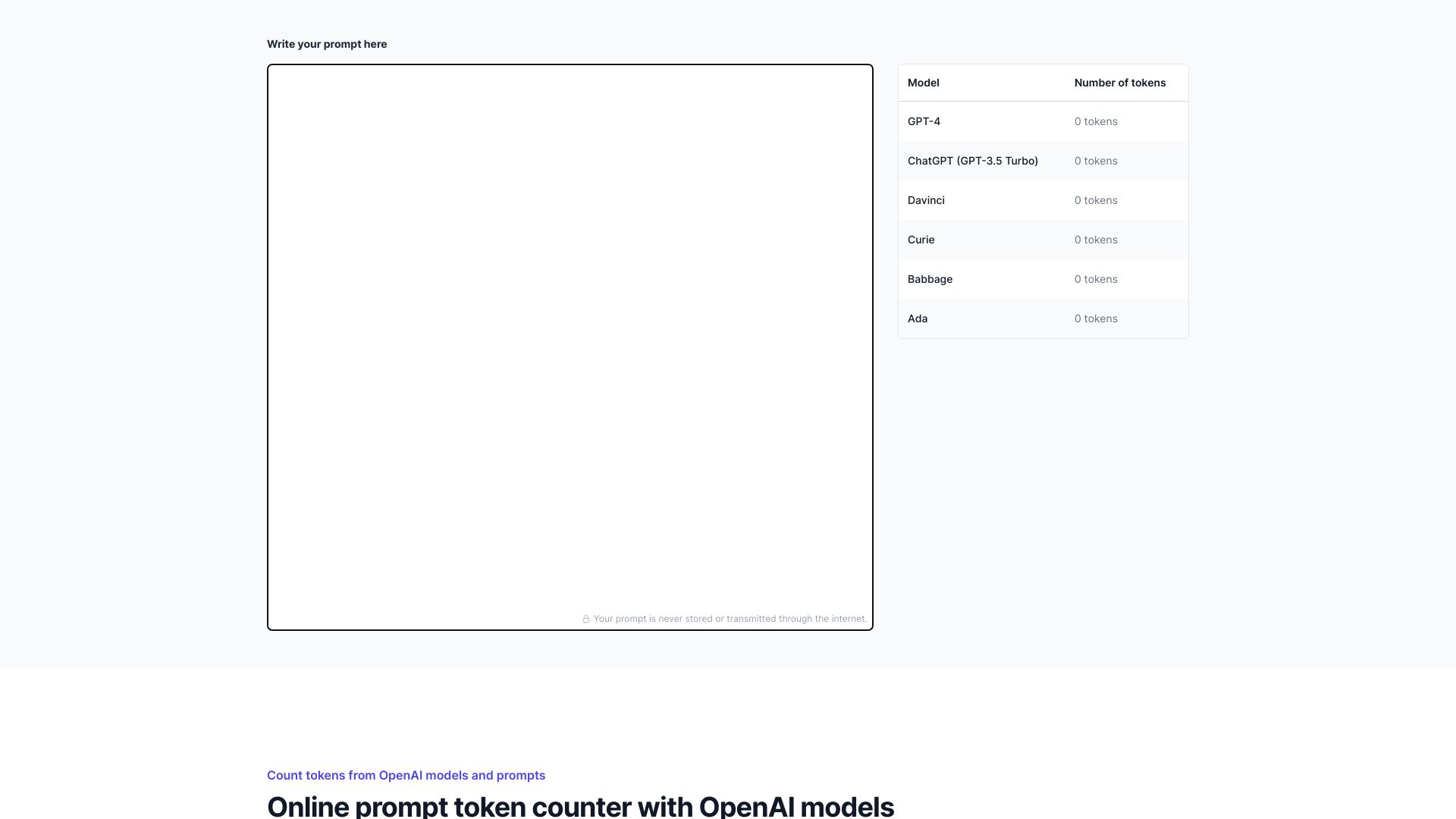

What is Prompt Token Counter for OpenAI Models? It is a tool that helps users manage token usage when working with OpenAI models. It gives an instant token count for any prompt, which matters because models accept a limited number of tokens and API usage is billed by the token. Knowing the count up front helps you write prompts that fit the model and cost less to run.
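To make the cost side concrete, here is a minimal sketch of the arithmetic, assuming pricing is quoted per 1,000 tokens. The function name `estimate_cost` and the `price_per_1k_tokens` parameter are hypothetical, and the price in the example is a placeholder, not a real rate; check the current pricing for your chosen model.

```python
def estimate_cost(token_count: int, price_per_1k_tokens: float) -> float:
    """Estimate the cost of sending `token_count` tokens.

    `price_per_1k_tokens` is a hypothetical input: look up the real rate
    for your model before relying on the result.
    """
    if token_count < 0 or price_per_1k_tokens < 0:
        raise ValueError("token_count and price_per_1k_tokens must be non-negative")
    return token_count / 1000 * price_per_1k_tokens


if __name__ == "__main__":
    # Placeholder price, for illustration only.
    print(estimate_cost(token_count=2500, price_per_1k_tokens=0.002))
```

Trimming a prompt lowers the token count, and the estimated cost falls in direct proportion.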

How to use Prompt Token Counter for OpenAI Models? Using the Prompt Token Counter is straightforward. Enter your prompt, and the tool instantly displays its token count. If the count is too high, shorten or rephrase the prompt until it fits within the token limit of your chosen OpenAI model, which keeps requests valid and reduces cost.
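The same check can be roughly approximated in code. The sketch below is not how the tool itself counts tokens; it uses the common rule of thumb of about 4 characters per token for English text, so treat the result as an estimate. The names `estimate_tokens` and `fits_budget` and the `max_tokens` value are hypothetical. For exact counts you would need a real tokenizer such as the `tiktoken` library.

```python
import math

# Assumption: roughly 4 characters per token for English text.
# This is a rule of thumb, not a real tokenizer.
CHARS_PER_TOKEN = 4


def estimate_tokens(prompt: str) -> int:
    """Return a rough token estimate for a prompt (heuristic only)."""
    if not prompt:
        return 0
    return math.ceil(len(prompt) / CHARS_PER_TOKEN)


def fits_budget(prompt: str, max_tokens: int) -> bool:
    """Check whether the estimated count stays within a chosen limit."""
    return estimate_tokens(prompt) <= max_tokens


if __name__ == "__main__":
    prompt = "Summarize the following article in three bullet points."
    # max_tokens here is an arbitrary illustrative budget, not a model limit.
    print(estimate_tokens(prompt), fits_budget(prompt, max_tokens=50))
```

If `fits_budget` returns `False`, shorten the prompt and check again, just as you would with the counter itself.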

Core features of Prompt Token Counter for OpenAI Models:

- Real-time Token Counting: Get immediate feedback on your prompt's token usage.

- User-friendly Interface: Effortless navigation and a simple input field make it accessible for all users.

- Customizable Settings: Tailor the tool to fit your specific needs and preferences.

- Integration Support: Seamlessly integrate with various AI content creation platforms.

Ready to take your AI content to the next level? Try the Prompt Token Counter for OpenAI Models today and see how precise token management keeps your prompts within limits and your costs predictable. Start optimizing your prompts now!